I’ve said this about social media platforms: if you are making an informed decision to trade privacy for convenience / information / entertainment / camaraderie, and you feel that you are getting a good “deal” in that trade, then social media platforms are awesome. If you only think you are getting something in the deal, unaware of what the platform owners are getting from you, then I find that problematic.

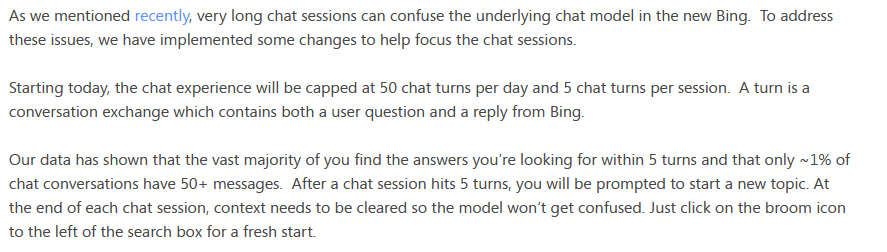

The same is true for the public AI models. Some of what they are getting from you is just language training & beta testing. If I had a dozen people type questions, I would not have a robustly representative sample of how ‘people’ talk. Getting a few million people to provide samples of their linguistic quirks is absolutely an important part of producing universally useful natural language processing. The models are also asking you to flag anything odd / wrong / unsettling. Here too, the public is adding volume to testing that has already been done.

But … if an AI is being trained on user inputs, the information you provide is being incorporated into the underlying patterns. Which isn’t to say they are saving the exact text I provided in a transaction. Even so, the information provided can, in a more general sense, become part of the AI’s knowledge base. And if that knowledge base is used to serve results to the general public, then it is possible for the AI to “leak” confidential information that I fed into transactions.
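
To make that concrete, here is a deliberately tiny sketch in Python. It is not how any real public AI model works (those are large neural networks, not word counters), and the sample sentences and the “project bluebird” input are made up for illustration. The toy only keeps counts of which word tends to follow which, never the submitted text itself, and it can still hand a confidential detail back to anyone who supplies the right prompt.

```python
from collections import Counter, defaultdict


def train(model, text):
    """Fold a piece of text into the next-word counts.

    Only word-to-next-word counts are kept; the text itself is not stored.
    """
    words = text.lower().split()
    for current, following in zip(words, words[1:]):
        model[current][following] += 1


def complete(model, prompt, max_words=8):
    """Greedily extend the prompt with the most frequent next word."""
    words = prompt.lower().split()
    for _ in range(max_words):
        candidates = model.get(words[-1])
        if not candidates:
            break  # the model has never seen anything follow this word
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


model = defaultdict(Counter)

# Made-up "public" inputs from ordinary users.
for sample in ["the weather is nice today", "the meeting is at noon"]:
    train(model, sample)

# Made-up confidential input that one user typed into a transaction.
train(model, "project bluebird launches in march")

# A different user who only knows the project name asks the model to continue.
print(complete(model, "project bluebird"))
# prints: project bluebird launches in march
```

Nothing in `model` is a saved copy of my sentence, but the patterns learned from it are enough to reconstruct it. Real models blend far more data and are much less likely to repeat any single input word for word, so treat this as an illustration of the risk, not a measurement of it.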